Understanding the Context: From Static Overlays to Intelligent Experiences

As augmented reality (AR) matures, the industry’s focus is shifting from merely overlaying digital imagery onto the physical world to creating experiences that respond intelligently to context. Early AR applications often felt static: virtual objects were placed in a scene, but they neither understood their surroundings nor adapted to the user’s intent. Now, advances in machine learning, computer vision, and edge computing are unlocking the potential for AR systems that not only “see” the environment, but interpret it, learn from it, and predict what the user might need next.

This transformation is not happening in a vacuum. The proliferation of image sensors, depth cameras, and lidar scanners in AR headsets and smartphones provides a rich stream of spatial data. Machine learning algorithms can parse this data to identify objects, categorize scenes, and gauge user behavior. Contextual awareness—the system’s ability to know whether you’re in a kitchen, a classroom, or a factory floor—becomes the foundation for delivering tailored content and simplifying user interactions.

For AR to achieve mainstream utility, the technology must feel natural, unobtrusive, and genuinely helpful. Think of it this way: users don’t just want digital overlays; they want guidance, shortcuts, and relevant information offered at the right time, in the right place. Consider a field technician troubleshooting a complex machine. With a context-aware AR interface, the device could recognize the components in front of the user, highlight relevant instruction manuals, predict what tool they’ll need next, and even provide a step-by-step procedure. Or imagine a shopper in a store: as they browse, the AR interface recognizes products, displays price comparisons, and suggests complementary items—entirely without the user having to navigate menus.

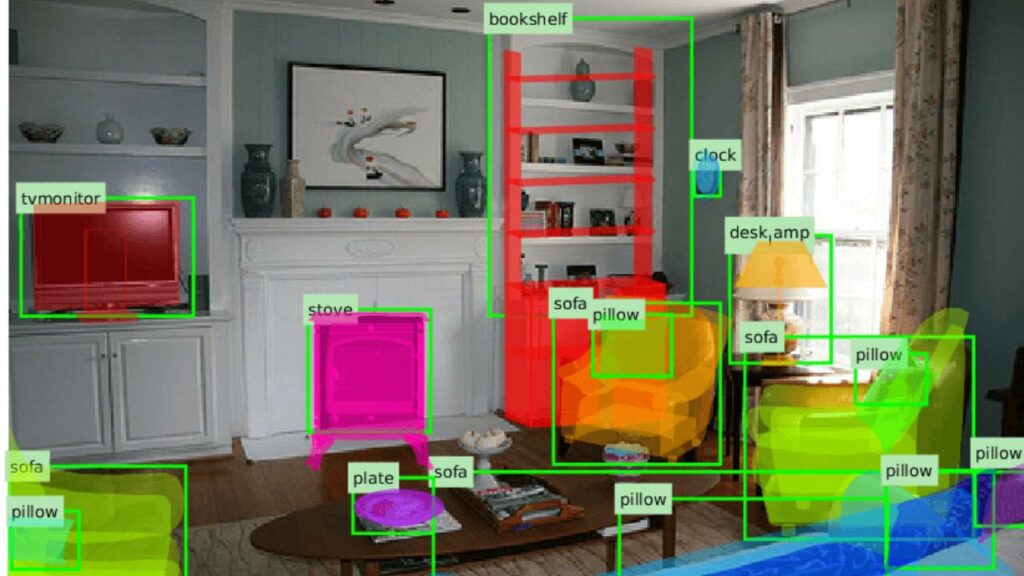

Artificial intelligence supercharges this contextual understanding. Deep learning models trained on vast image datasets can identify everyday objects with impressive accuracy and speed. Semantic segmentation algorithms break down the scene into meaningfully labeled categories: “table,” “door,” “person,” “machine part.” Meanwhile, predictive analytics and recommender systems use patterns in user behavior to anticipate what content or action would be most helpful. Combined, these capabilities turn AR from a novelty into a dynamic assistant that adapts continuously.

Additionally, contextual and AI-driven interactions can empower new input methods. Beyond gesture and voice commands, developers are experimenting with gaze tracking, haptic feedback that confirms an input was registered, and text input solutions such as universal virtual keyboards that appear when most needed. These interfaces improve as the system better understands not just the user’s immediate actions but their underlying intentions. If the AR system knows you’re reading instructions to repair a part, it might automatically bring up the relevant keyboard layout when it predicts you’ll want to add a note or query a parts database.

This evolution depends on robust privacy and data handling mechanisms. After all, AI-driven AR involves continuous observation of a user’s environment and potentially sensitive personal cues. As engineers refine these algorithms and integrate them into consumer devices, they must adopt privacy-by-design principles and comply with emerging standards that govern spatial data. Sustained attention to interoperability and ethics helps these powerful AI tools build trust rather than erode it.

Key Technologies and Techniques: Computer Vision, Scene Understanding, and Predictive Analytics

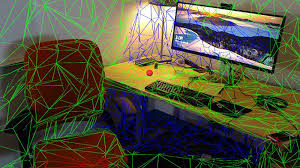

For AR devices to become context-savvy, several technical elements must work in concert. Machine learning and computer vision form the backbone, but additional techniques—ranging from sensor fusion to on-device neural networks—play pivotal roles.

Computer Vision and Object Recognition

At the heart of contextual AR lies computer vision, the science of enabling machines to interpret and understand the visual world. Convolutional neural networks (CNNs) and Transformer-based vision models have dramatically improved object detection and classification. Given a live camera feed, these models can identify whether the user is looking at a car engine, a surgical instrument, or a piece of furniture. By linking recognized objects to digital resources—such as manuals, training videos, or schematics—the AR system becomes a powerful contextual search engine, invoked simply by looking around.

This capability is invaluable in industrial and enterprise scenarios. A maintenance technician can point their headset at a piece of machinery; the AR system’s computer vision algorithms identify the part and overlay live sensor readings, maintenance history, and repair instructions. In medical training, a student wearing AR glasses can look at anatomical models or even a live patient, triggering overlays that label organs, bones, or blood vessels, aiding in both learning and diagnostics.
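The link between recognized objects and digital resources can be sketched as a simple lookup. This is a minimal illustration, not a real detector integration: the `Detection` type, the `RESOURCES` catalog, its entries, and the 0.6 confidence threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object reported by a (hypothetical) vision model."""
    label: str
    confidence: float


# Hypothetical catalog mapping object labels to linked resources.
RESOURCES = {
    "hydraulic_pump": ["Pump service manual", "Seal replacement video"],
    "control_panel": ["Wiring schematic"],
}


def resources_for(detections, min_confidence=0.6):
    """Return (label, resources) pairs for confident, known detections,
    ordered from most to least confident."""
    confident = [
        d for d in detections
        if d.confidence >= min_confidence and d.label in RESOURCES
    ]
    confident.sort(key=lambda d: d.confidence, reverse=True)
    return [(d.label, RESOURCES[d.label]) for d in confident]


detections = [
    Detection("control_panel", 0.70),
    Detection("hydraulic_pump", 0.90),
    Detection("chair", 0.95),           # recognized, but nothing linked
    Detection("hydraulic_pump", 0.30),  # below the threshold
]
for label, docs in resources_for(detections):
    print(label, docs)
```

Filtering on confidence before the lookup keeps low-certainty detections from cluttering the view with irrelevant documents.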

Semantic Scene Understanding and Spatial Mapping

Object recognition alone isn’t enough. For truly contextual AR, the system must understand spatial relationships and the semantics of entire scenes. Semantic segmentation algorithms assign a class label to each pixel in the camera’s field of view, effectively giving the device a structured understanding of the environment. It can differentiate walls from floors, tables from chairs, tools from spare parts. With additional spatial mapping techniques such as simultaneous localization and mapping (SLAM), the AR device can reconstruct a 3D representation of its environment, anchoring digital content accurately and persistently.

Armed with this semantic map, the AR system can, for example, know that a certain holographic overlay should be placed on a table rather than floating awkwardly in midair. It can also infer user context: if it sees an assembly line and certain patterns of tools, it might guess the user is performing a repetitive task and offer shortcuts or predictive step-by-step instructions. Over time, this level of understanding allows AR experiences to feel less like a digital sticker book and more like a thoughtful collaborator aware of its environment.
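To make the table-placement idea concrete, here is a minimal sketch that picks an anchor cell from a segmentation result. It assumes the segmentation output has already been reduced to a small 2D grid of label strings; a real system would work with per-pixel masks and 3D geometry. The function name `anchor_cell` is hypothetical.

```python
def anchor_cell(label_grid, target="table"):
    """Return the (row, col) cell labeled `target` that lies closest to the
    centroid of all such cells, or None if the label is absent.

    Snapping to an actual labeled cell guarantees the anchor sits on the
    surface even when the region is not convex.
    """
    cells = [
        (r, c)
        for r, row in enumerate(label_grid)
        for c, label in enumerate(row)
        if label == target
    ]
    if not cells:
        return None
    centroid_r = sum(r for r, _ in cells) / len(cells)
    centroid_c = sum(c for _, c in cells) / len(cells)
    return min(
        cells,
        key=lambda rc: (rc[0] - centroid_r) ** 2 + (rc[1] - centroid_c) ** 2,
    )


grid = [
    ["wall",  "wall",  "wall",  "wall",  "wall"],
    ["floor", "table", "table", "table", "floor"],
    ["floor", "table", "table", "table", "floor"],
    ["floor", "table", "table", "table", "floor"],
    ["floor", "floor", "floor", "floor", "floor"],
]
print(anchor_cell(grid))          # center of the table region
print(anchor_cell(grid, "sofa"))  # no such surface in view
```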

Predictive Analytics and Machine Learning on the Edge

Beyond object and scene recognition, predictive analytics refines the user experience. By analyzing patterns in user behavior, interaction histories, and environmental cues, the AR system can forecast user needs. Suppose a user frequently searches for part numbers after identifying a machine. Over time, the AR interface might proactively surface part information and a text input field right when the user approaches that machine, preempting the need for manual navigation.
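The part-number scenario can be approximated with nothing more than frequency counts. The sketch below is a deliberately simple stand-in for a learned model: `NextActionPredictor`, the context and action strings, and the `min_support` threshold are all illustrative assumptions.

```python
from collections import Counter, defaultdict


class NextActionPredictor:
    """Suggest the action a user most often takes in a given context."""

    def __init__(self, min_support=3):
        # context -> Counter of actions observed in that context
        self._counts = defaultdict(Counter)
        # Minimum number of observations before a suggestion is offered.
        self.min_support = min_support

    def observe(self, context, action):
        self._counts[context][action] += 1

    def predict(self, context):
        """Return the most frequent action for `context`, or None when
        the context is unseen or the evidence is below min_support."""
        counts = self._counts.get(context)
        if not counts:
            return None
        action, seen = counts.most_common(1)[0]
        return action if seen >= self.min_support else None


predictor = NextActionPredictor(min_support=3)
for _ in range(3):
    predictor.observe("pump_A", "search_part_number")
predictor.observe("pump_A", "open_manual")

print(predictor.predict("pump_A"))   # suggestion backed by three observations
print(predictor.predict("valve_B"))  # nothing observed yet
```

Requiring a minimum number of observations is one way to keep the interface from surfacing suggestions after a single coincidental action.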

Executing these models efficiently often means running inference locally (“on the edge”) rather than relying solely on cloud computation. On-device AI reduces latency, ensuring that the experience remains responsive even when network connections fluctuate. Recent advances in model compression, quantization, and edge-optimized neural accelerators enable devices to run complex vision and language models locally, preserving user privacy and maintaining snappy interactions. The result is an AR headset that can intelligently annotate the world in real time, anticipate user queries, and adapt to changing situations, all without stalling or lagging.
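As a rough illustration of quantization, the sketch below maps floating-point values to 8-bit integers with an affine scheme (a scale plus a zero point) and back. It is written in plain Python for clarity and makes no claim about how any particular framework or accelerator does this; the function names are hypothetical.

```python
def quantize(values, num_bits=8):
    """Affine-quantize floats to signed integers of `num_bits` bits.

    Returns (quantized_values, scale, zero_point).
    """
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # The represented range must include zero so that 0.0 maps cleanly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - lo / scale)
    quantized = [
        max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map quantized integers back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, 0.0, 0.5, 2.0]
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)
print(q)
print([round(w, 3) for w in restored])
```

Each weight now needs one byte instead of four, at the cost of a reconstruction error on the order of one quantization step. That is the basic trade-off behind running larger models on constrained hardware.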

Contextual Awareness and Multimodal Input

As AR systems become smarter, they also become more adept at handling multimodal input. Voice commands can be contextual: if the user says “Show me the manual,” the AR system knows to display the manual of the object currently in view. Gaze tracking data can inform the AR interface which part of the scene holds the user’s interest, prompting it to surface relevant information automatically. Gesture recognition complements these inputs, allowing users to select, manipulate, or annotate holographic objects with intuitive hand movements.
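A contextual voice command such as “Show me the manual” can be resolved by combining the utterance with what the user is looking at. The sketch below assumes speech has already been transcribed and that gaze and detection results arrive as plain strings. `SceneContext`, `resolve_command`, and the returned dictionaries are illustrative, not a real API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SceneContext:
    gazed_object: Optional[str]   # object under the user's gaze, if any
    visible_objects: List[str]    # all objects currently recognized


def resolve_command(utterance: str, ctx: SceneContext) -> dict:
    """Turn a transcribed utterance plus scene context into an action."""
    text = utterance.lower().strip()
    if "manual" in text:
        # Prefer the gazed object; fall back to the only visible object.
        target = ctx.gazed_object
        if target is None and len(ctx.visible_objects) == 1:
            target = ctx.visible_objects[0]
        if target is None:
            # Ambiguous: ask instead of guessing.
            return {"action": "ask_clarification",
                    "options": list(ctx.visible_objects)}
        return {"action": "show_manual", "target": target}
    return {"action": "unknown"}


ctx = SceneContext(gazed_object="pump_A",
                   visible_objects=["pump_A", "valve_B"])
print(resolve_command("Show me the manual", ctx))
```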

Universal virtual keyboards and other text input solutions also benefit from contextual intelligence. When the AR system detects that the user is focusing on a text field—perhaps to log an action or search for instructions—it can present a simplified, domain-specific keyboard layout. If the user is working in a medical context, the keyboard might highlight medical terminology; if they’re browsing a parts catalog, the interface might offer predictive suggestions based on previous queries. These subtle shifts in interface elements reduce cognitive load and keep the user engaged in the task at hand rather than wrestling with controls.

From Niche Applications to Ecosystem Evolution: The Future Impact of Contextual, AI-Driven AR

As AI-driven, context-aware AR matures, its influence will extend beyond specialized applications into everyday life. The early adopters—enterprise, healthcare, education, and high-skill verticals—are already benefiting from enhanced efficiency, better training, and reduced error rates. Eventually, as standards coalesce and hardware costs drop, these innovations will trickle into consumer markets and spawn a new generation of AR apps that feel indispensable rather than superfluous.

Enterprise and Industrial Applications

In industrial settings, AI-driven AR can streamline complex workflows. Technicians can receive step-by-step guidance tailored to the specific machines they’re viewing. Logistics staff can quickly scan a warehouse, and the AR system highlights inventory locations, recommends optimal picking routes, and even identifies out-of-place items. The result is more efficient operations, reduced training times, and fewer mistakes.

In fields like architecture and construction, contextual AR can overlay digital blueprints that adjust automatically based on the user’s viewpoint, helping teams visualize structural elements before committing to costly physical changes. Engineers can inspect prototypes using AR headsets that not only label components but also predict the next piece of data or tool the user will need, removing friction from the design process.

Healthcare and Education

Medical professionals can benefit from AR that identifies anatomical structures or surgical instruments in real time. A surgeon wearing AR glasses might look at an MRI scan superimposed on a patient’s body, while the system highlights key structures and suggests procedures. Over time, as the system observes patterns in the surgeon’s workflow, it can adapt its interface, for example by displaying certain annotations more prominently or recalling the surgeon’s preferred reference materials at the right moment.

In education, AR textbooks can come alive. Students can point their AR-enabled devices at a science poster, and the system identifies concepts, provides definitions, and even recommends related interactive modules. By analyzing student behavior—where they pause, what they revisit—the AR application can tailor its teaching strategy. AI-driven context ensures that learning content isn’t just passively displayed; it’s actively tuned to each learner’s needs and curiosities.

Retail, Hospitality, and Consumer Domains

Imagine stepping into a grocery store and wearing AR glasses that recognize products, offering personalized nutritional information and recipe suggestions based on your dietary preferences. As you browse, the AR system learns your patterns, perhaps noticing that you frequently compare unit prices. The next time you pick up a product, it proactively displays cost comparisons and discount alerts.

In hospitality, AR-guided tours can identify landmarks, share historical insights, and translate foreign signage on the fly. For casual home use, an AI-driven AR assistant might recognize rooms and furniture, helping you visualize how new décor items would look or suggesting maintenance tips at the moment you approach a leaky faucet. Over time, what begins as a novelty—receiving contextual overlays at a museum—evolves into a fundamental interface for navigating daily life.

Challenges and Considerations: Ethics, Privacy, and Data Stewardship

For all its potential, AI-driven contextual AR raises pressing ethical and privacy questions. Systems that continuously scan and interpret a user’s environment are, by necessity, collecting a wealth of visual data—images of private homes, personal possessions, and the faces of bystanders. Without careful governance, this data could be misused, leading to surveillance concerns, unwanted profiling, or unauthorized sharing.

Developers and regulators must collaborate to establish clear guidelines. Privacy-by-design techniques can ensure sensitive data is processed locally and not stored indefinitely. Federated learning—where models improve collectively without centralizing raw data—could help maintain user privacy while still refining AI capabilities. Encryption, access controls, and secure authentication methods can prevent unauthorized parties from tapping into the AR device’s data stream.
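The core aggregation step of federated learning can be sketched in a few lines. Each device trains locally and sends back only its model weights and the number of examples it trained on, and the server combines them with a weighted average. This is a simplified illustration of federated averaging with hypothetical names; real deployments typically add secure aggregation, clipping, and noise.

```python
def federated_average(client_updates):
    """Combine client weight vectors into one global vector.

    `client_updates` is a list of (weights, num_examples) pairs. Each
    client's weights count in proportion to its number of examples.
    Raw training data never appears here, only the weights.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported by any client")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


updates = [
    ([1.0, 2.0], 1),  # headset A trained on 1 example
    ([3.0, 4.0], 3),  # headset B trained on 3 examples
]
print(federated_average(updates))
```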

Ethical considerations also extend to how AR systems present information. When algorithms highlight certain products or prioritize specific data, what are the implications for user choice? If predictive analytics become too aggressive, the user may feel steered toward certain actions rather than informed. Striking the right balance requires transparent algorithms, user-centric design, and the ability for individuals to override or customize recommendations.

The Interoperability Factor and Emerging Standards

As contextual and AI-driven AR applications proliferate, interoperability will become critical. In a future where multiple AR devices, services, and platforms coexist, a user might start a task on one headset and continue on another. Ensuring that semantic understanding, object recognition models, and user preferences carry over seamlessly requires standardized data schemas, APIs, and communication protocols.

Industry groups, standards bodies, and open-source initiatives are already working to define common frameworks for spatial data and scene semantics. AI model formats like ONNX (Open Neural Network Exchange) can help ensure that trained models run consistently across different hardware platforms. Interoperability in contextual AR helps level the playing field for developers and protects users from vendor lock-in. Over time, this harmonization can lead to an ecosystem where AR apps, regardless of who builds them, communicate effectively and respect user privacy and preferences.
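To show what a shared data schema buys in practice, here is a minimal sketch of exporting labeled anchors from one device and importing them on another. The schema, its field names, and the version string are invented for this example and do not correspond to any published standard.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

SCHEMA_VERSION = "0.1"  # hypothetical version tag for this example


@dataclass
class Anchor:
    id: str
    label: str             # semantic label, e.g. "table"
    position: List[float]  # [x, y, z] in meters, in a shared world frame


def export_scene(anchors):
    """Serialize anchors to a versioned JSON document."""
    doc = {
        "schema_version": SCHEMA_VERSION,
        "anchors": [asdict(a) for a in anchors],
    }
    return json.dumps(doc, sort_keys=True)


def import_scene(payload):
    """Parse a JSON document, rejecting versions this code does not know."""
    doc = json.loads(payload)
    if doc.get("schema_version") != SCHEMA_VERSION:
        raise ValueError("unsupported schema version")
    return [Anchor(**a) for a in doc["anchors"]]


scene = [Anchor(id="a1", label="table", position=[0.0, 1.0, 2.5])]
payload = export_scene(scene)
print(payload)
print(import_scene(payload) == scene)
```

An explicit version field lets a receiving device refuse data it cannot interpret rather than silently misplacing content.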

User Interface Evolution and the Role of Text Input

As AR becomes more context-aware, user interfaces will evolve beyond the static buttons and menus of early applications. Contextual triggers—like looking at a particular object or being in a specific location—will replace manual commands. However, users will still need methods to input custom information. In many scenarios, especially in enterprise or complex workflows, the ability to input text remains crucial. Enter the universal virtual keyboard and other text input solutions.

With AI-driven context, the AR system can predict when text input is necessary and tailor the keyboard layout accordingly. For a technician looking at a machine part, the keyboard might highlight commonly used part numbers or maintenance terms. For a medical practitioner examining a patient, the keyboard might prioritize medical abbreviations and ICD codes. By combining predictive analytics with a user’s context, text input becomes smoother, faster, and more meaningful. Instead of forcing the user to navigate lengthy menus, the system intelligently surfaces the exact words or phrases that might be relevant, bridging the gap between traditional computing activities and immersive AR experiences.
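One simple way to realize context-tailored suggestions is to rank prefix matches using a vocabulary specific to the current domain. In the sketch below, the domains, terms, and usage counts in `DOMAIN_VOCAB` are made up for illustration. A production system would learn them from usage and likely use a language model rather than raw counts.

```python
# Hypothetical per-domain vocabularies: term -> how often it has been used.
DOMAIN_VOCAB = {
    "maintenance": {"gasket": 12, "gearbox": 7, "grease": 9, "torque": 15},
    "medical": {"tachycardia": 5, "tid": 8, "triage": 11},
}


def suggest(prefix, domain, limit=3):
    """Return up to `limit` terms from the domain vocabulary that start
    with `prefix`, most frequently used first (ties broken alphabetically)."""
    vocab = DOMAIN_VOCAB.get(domain, {})
    p = prefix.lower()
    matches = [term for term in vocab if term.startswith(p)]
    matches.sort(key=lambda term: (-vocab[term], term))
    return matches[:limit]


print(suggest("g", "maintenance"))
print(suggest("t", "medical", limit=2))
```

The same typed prefix yields different suggestions depending on the detected domain, which is the behavior the keyboard scenarios above describe.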

A Glimpse into the Future: Ambient, Adaptive AR

In the coming years, we may witness AR systems that feel less like technology and more like a subtle layer of cognitive augmentation. The headset or glasses, powered by advanced AI models, will quietly observe our actions and environment, stepping in when needed to provide guidance or streamline tasks. Over time, these systems might develop a nuanced understanding of individual users—their preferences, their routines, their skill levels—and adapt accordingly.

For example, a novice technician might receive detailed, step-by-step guidance with rich visual overlays, while an experienced professional sees only occasional prompts or shortcuts. Students could progress through AR-enabled lessons that adjust complexity based on their demonstrated mastery. Shoppers might gradually see less generic advertising and more genuinely helpful recommendations. This personalization arises naturally from contextual and AI-driven interactions, as the AR system refines its models through continuous learning.

Ultimately, contextual intelligence in AR isn’t about creating flashy demos or one-off gimmicks. It’s about making technology more human-centric—minimizing friction, reducing the cognitive load of navigating virtual menus, and surfacing information precisely when it’s needed. As developers, researchers, and product designers continue to push the boundaries of what machine learning and computer vision can achieve, AR will evolve from a static overlay into a fluid, intelligent medium that enriches our understanding of the world and helps us make better decisions.